Nowadays, most Java microservices, and even many other Java applications, are packaged and deployed as Docker containers. Everyone is enjoying (I hope 😆) the Docker experience compared to traditional VMs. But Docker containerization does not come alone.. nothing is autonomous 😁 So there are many concerns to take into consideration while containerizing Java applications, like tuning the JVM.

The Docker race was accelerated by the arrival of Kubernetes on the market, which comes with an amazing set of great features.

One of the great features available for handling containers is the option to control the resources allocated to a Pod 😆 for example:

| |

This YAML resource descriptor defines CPU and memory limits for the two containers running in this frontend Pod. These limits were not recognized by the JVM in Java versions up to 9 😭😭 With the arrival of Java 10, the JVM is now aware of these limits. 🥳

This great support was even backported to JDK 8 update 191.

What are these great improvements for Docker containers?

We can quickly test how aware the JVM under Java 10 is of the container limits that we set:

Let’s create a Docker container without any limits. In the created container, we will run JShell to see what information the Runtime gives us.

| |

Before digging into the returned values, what are these methods?

Based on the Javadoc of the Runtime class:

- totalMemory() Gives the total amount of memory in the Java virtual machine. The value returned by this method may vary over time, depending on the host environment. Note that the amount of memory required to hold an object of any given type may be implementation-dependent. Returns: the total amount of memory currently available for current and future objects, measured in bytes.

- maxMemory() Gives the maximum amount of memory that the Java virtual machine will attempt to use. If there is no inherent limit then the value Long.MAX_VALUE will be returned. Returns: the maximum amount of memory that the virtual machine will attempt to use, measured in bytes.
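As a minimal sketch of how these values can be read, the class below calls the Runtime methods described above and prints them in bytes and megabytes. The class name RuntimeProbe and the helper toMegabytes are hypothetical names introduced for illustration, and the numbers printed depend entirely on the machine or container the JVM runs in.

```java
// Hypothetical helper class; only the Runtime calls come from the article.
class RuntimeProbe {

    /** Converts a byte count to megabytes, using 1 MB = 1024 * 1024 bytes. */
    static double toMegabytes(long bytes) {
        return bytes / (1024.0 * 1024.0);
    }

    public static void main(String[] args) {
        Runtime runtime = Runtime.getRuntime();
        long total = runtime.totalMemory();
        long max = runtime.maxMemory();

        // The values depend on the host or container the JVM is running in.
        System.out.printf("Total Memory: %d bytes = %.4f MB%n", total, toMegabytes(total));
        System.out.printf("Max Memory: %d bytes = %.4f MB%n", max, toMegabytes(max));
        System.out.printf("Available CPUs: %d%n", runtime.availableProcessors());
    }
}
```

The same three calls can be typed directly into JShell; wrapping them in a class just makes the check easy to rerun inside different containers.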

Results:

- Total Memory: 33554432 bytes = 32 MB 👈 total allocated space reserved for the JVM

- Max Memory: 524288000 bytes = 500 MB 👈 the maximum amount of memory that the JVM can use

- Available CPUs: 8

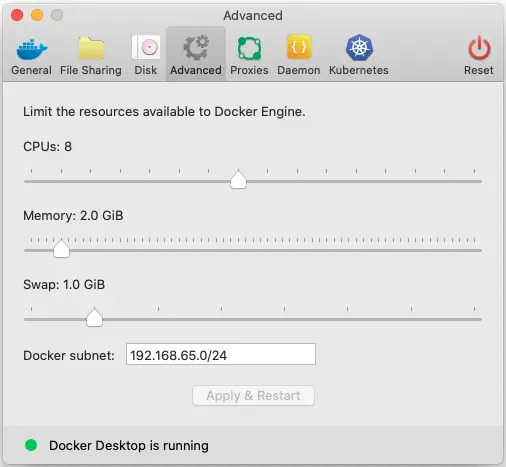

Let’s look at the Docker Engine configuration on my laptop:

Docker Engine configuration

You can see the 8 CPUs listed by JShell, but the memory allocated to the Docker Engine is 2GB and not 500MB 🤔 Why is it only about 25% of the available memory? 😤

Let’s do the same with a Docker container that has a memory limit of 800M and a CPU limit of 2:

| |

Results:

- Total Memory: 13.5625 MB

- Max Memory: 193.375 MB

- Available CPUs: 2

The Max Memory is approximately 25% of the 800MB memory that we allocated to our container 🤔

We can run another command to check the maximum heap size that the JVM will use:

| |

You can see here that the MaxHeapSize is equal to 209715200 bytes = 200 MB 😁 a more precise value 🥳 But again, why is it only 25% of the available memory? 😤

This is the default behavior in Java 10+: the JVM heap gets at most 25% of the container’s memory. This is why we got only 25% of the allocated memory. 😭

Don’t worry! There is a way to override the default 25% 🧐 We can pass the -XX:MaxRAMPercentage parameter with the desired value. Let’s test it:
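The MaxHeapSize flag can also be read from inside a running application. The sketch below assumes a HotSpot-based JVM, since it relies on the com.sun.management.HotSpotDiagnosticMXBean platform bean; the class name MaxHeapSizeProbe is a hypothetical name used only for this example.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical helper class; assumes a HotSpot-based JVM.
class MaxHeapSizeProbe {

    /** Reads the effective MaxHeapSize flag of the running JVM, in bytes. */
    static long maxHeapSizeBytes() {
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        // The flag value is exposed as a decimal string.
        return Long.parseLong(bean.getVMOption("MaxHeapSize").getValue());
    }

    public static void main(String[] args) {
        long bytes = maxHeapSizeBytes();
        System.out.printf("MaxHeapSize: %d bytes = %d MB%n", bytes, bytes / (1024 * 1024));
    }
}
```

Logging this value at startup is one way to confirm, from the application itself, which heap limit the JVM derived from the container settings.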

| |

When we set MaxRAMPercentage to 50%, we got 419430400 bytes = 400MB, which is 50% of the 800M memory limit defined for the container.
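The two figures seen so far follow from simple arithmetic on the container limit. The sketch below reproduces it; HeapSizing and expectedMaxHeapBytes are hypothetical names, and the calculation is only an approximation of what the JVM does, since the real JVM may also align or adjust the heap size.

```java
// Hypothetical helper class illustrating the percentage arithmetic only.
class HeapSizing {
    static final long MB = 1024L * 1024L;

    /** Approximate max heap for a container limit and a MaxRAMPercentage value. */
    static long expectedMaxHeapBytes(long containerLimitBytes, double maxRamPercentage) {
        return (long) (containerLimitBytes * maxRamPercentage / 100.0);
    }

    public static void main(String[] args) {
        long limit = 800 * MB; // the 800M container limit used in the article

        // Default of 25%, then the overridden 50%.
        System.out.println(expectedMaxHeapBytes(limit, 25.0)); // 209715200
        System.out.println(expectedMaxHeapBytes(limit, 50.0)); // 419430400
    }
}
```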

| |

Cool! 🥳 The -XX:MaxRAMPercentage parameter is also available on Java 8 starting from update 191. But there it only accepts a decimal value:

| |

🥳 In this Java 8 based container, we got the same MaxHeapSize value as in the Java 10 container 😁

⚠️ Important note: it’s true that we can set the -XX:MaxRAMPercentage parameter to 100%, but it’s not recommended and it’s even dangerous 😱 Guess why?

Imagine a situation: we want to deploy on our Kubernetes cluster a Java 10 based Docker image containing a Java application, with a memory limit set to 1GB. When the Pod is created, it will have 1GB of memory allocated to it. If the -XX:MaxRAMPercentage parameter is set to 100%, the Java heap alone can consume the total amount of memory, which is the 1GB. In this case, the Pod will not have enough resources left to open a shell session, or even to communicate with the kube api-server, etc.. So the Kube Master will consider this Pod dead or unhealthy once it becomes unreachable.. it will create a new Pod and kill the old one.. and when memory saturates again.. a new Pod will be created and the older one discarded.. infinite loop 🥵

💡 A golden tip: set the -XX:MaxRAMPercentage parameter to 75% only.. to leave some room for the Pod’s internal processes 😎
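To make the tip concrete, here is a rough calculation of the memory left outside the heap for the 1GB Pod from the scenario above. HeapHeadroom and headroomBytes are hypothetical names, and the numbers are plain arithmetic, not measurements. Keep in mind that the JVM’s own non-heap memory (metaspace, thread stacks, code cache) also has to fit into that remainder.

```java
// Hypothetical helper class; plain arithmetic, not a measurement.
class HeapHeadroom {
    static final long MB = 1024L * 1024L;

    /** Memory left in the container once the heap has grown to its maximum. */
    static long headroomBytes(long containerLimitBytes, int maxRamPercentage) {
        long maxHeap = containerLimitBytes * maxRamPercentage / 100;
        return containerLimitBytes - maxHeap;
    }

    public static void main(String[] args) {
        long limit = 1024 * MB; // the 1GB limit from the scenario above

        System.out.println(headroomBytes(limit, 100) / MB + " MB left at 100%"); // 0 MB
        System.out.println(headroomBytes(limit, 75) / MB + " MB left at 75%");   // 256 MB
    }
}
```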

Two other JVM options have been added to give Docker container users more fine-grained control over the amount of system memory used for the Java heap:

- -XX:InitialRAMPercentage: sets the initial JVM heap size as a percentage of the total memory

- -XX:MinRAMPercentage: despite its name, sets the maximum JVM heap size as a percentage of the total memory when only a small amount of memory is available (MaxRAMPercentage applies otherwise)

Another recommended JVM option is -XX:+HeapDumpOnOutOfMemoryError, which tells the Java HotSpot VM to generate a heap dump when an allocation from the Java heap or the permanent generation cannot be satisfied. There is no overhead in running with this option, so it can be useful for production systems where the OutOfMemoryError exception takes a long time to surface. When the java.lang.OutOfMemoryError exception is thrown, a heap dump file is created. By default the heap dump is created in a file called java_pid<pid>.hprof in the working directory of the VM. We can specify an alternative file name or directory with the -XX:HeapDumpPath= option.

For example, -XX:HeapDumpPath=/disk2/dumps will cause the heap dump to be generated in the /disk2/dumps directory.
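An application can check at startup whether these heap dump options are actually active. The sketch below again assumes a HotSpot-based JVM and uses the HotSpotDiagnosticMXBean platform bean; HeapDumpFlags is a hypothetical class name used only for illustration.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical helper class; assumes a HotSpot-based JVM.
class HeapDumpFlags {

    /** Returns the current value of a HotSpot VM option as a string. */
    static String vmOption(String name) {
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        return bean.getVMOption(name).getValue();
    }

    public static void main(String[] args) {
        // "true" only if the JVM was started with -XX:+HeapDumpOnOutOfMemoryError
        System.out.println("HeapDumpOnOutOfMemoryError = " + vmOption("HeapDumpOnOutOfMemoryError"));
        // Empty unless -XX:HeapDumpPath=... was given
        System.out.println("HeapDumpPath = " + vmOption("HeapDumpPath"));
    }
}
```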

Before the end

The JVM options that we have seen can be added to the java command in the Dockerfile ENTRYPOINT. But this requires building another image each time we want to change the values of the JVM options.

A great solution is to put these JVM options in the JAVA_OPTS environment variable in the Kubernetes Deployment, which looks like:

| |
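Whatever way the options are passed, it can be worth verifying from inside the application that they reached the JVM. The sketch below lists the -XX flags the JVM was started with, using the standard RuntimeMXBean. It assumes the image’s entrypoint expands JAVA_OPTS on the java command line, since the JVM does not read that variable by itself; JvmFlagReport and xxFlags are hypothetical names.

```java
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class for logging the JVM flags at startup.
class JvmFlagReport {

    /** Keeps only the -XX options from a list of JVM arguments. */
    static List<String> xxFlags(List<String> jvmArguments) {
        List<String> flags = new ArrayList<>();
        for (String argument : jvmArguments) {
            if (argument.startsWith("-XX:")) {
                flags.add(argument);
            }
        }
        return flags;
    }

    public static void main(String[] args) {
        // Arguments passed to the JVM itself, not to the application's main method.
        List<String> jvmArguments = ManagementFactory.getRuntimeMXBean().getInputArguments();
        for (String flag : xxFlags(jvmArguments)) {
            System.out.println(flag);
        }
    }
}
```

If the output is empty in the Pod, the options defined in the Deployment most likely never made it onto the java command line.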

That’s the whole tale! 😁

Java support for Docker containers is a great feature that came with Java 10 (and was even backported to Java 8u191). It’s a very useful option for most Java applications deployed to Kubernetes 🥳